There is no magic pill. Not even Causal AI.

In the 1990s, 'Fen-Phen' was sold as a magic pill. It was used by people desperate to shed pounds quickly without the hard work of diet and exercise. Users reported significant weight loss, and Fen-Phen became very popular.

However, the dark side of this quick fix soon became apparent. Reports began to emerge of people developing serious heart and lung problems after using Fen-Phen. As the evidence mounted, it became clear that the risks of Fen-Phen far outweighed its benefits.

Because people are lazy, companies are lazy. When we have a problem, we want a quick and easy fix. But great solutions are neither quick nor easy.

"A magic pill is easy to take, works all the time, can be used by anyone and has no side effects"

When it comes to marketing insights, these are the typical "magic pill" claims:

EASY

"Just upload your data and the tool will automatically find the insight you need." That's a marketer's dream. But we are still a long way from it. Data is just numbers. The context and meaning of this data lie mostly outside the data. This is why insight methods are only "easy" in limited, standardised special cases.

EFFECTIVE

"Results are true with certainty". The claim is accompanied by phrases like "results are highly significant". The sad news is that "significance" does not measure "truth". Not at all. It only tells you how surprising the data would be if there were no effect at all, and even that holds only if the assumptions of the model are met. In most cases, the user is not even aware of these assumptions. It is easy to make significant models. In fact, the more meaningless a model is, the easier it is to make it significant: test enough variables and specifications, and something will come out significant by chance. Scientists call this practice "p-hacking".

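How easy it is to manufacture significance can be checked with a small simulation. This is a minimal sketch under my own assumptions: the outcome and all candidate "drivers" are pure random noise, the function names are made up for illustration, and the p-value uses the Fisher z approximation rather than an exact test.

```python
import math
import random


def pearson_r(x, y):
    """Plain Pearson correlation between two equally long lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def approx_p_value(r, n):
    """Two-sided p-value for r via the Fisher z-transform (normal approximation)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))


def count_false_positives(n_rows=200, n_candidates=100, alpha=0.05, seed=1):
    """Test many meaningless noise variables against a noise outcome."""
    rng = random.Random(seed)
    outcome = [rng.gauss(0, 1) for _ in range(n_rows)]
    hits = 0
    for _ in range(n_candidates):
        noise = [rng.gauss(0, 1) for _ in range(n_rows)]
        if approx_p_value(pearson_r(noise, outcome), n_rows) < alpha:
            hits += 1
    return hits


print("significant noise variables:", count_false_positives())
```

With a 5% threshold, about 5 out of every 100 meaningless variables will pass as "significant" on average. Report only those and you have a significant model that says nothing.
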
FOR EVERYBODY

A magic pill tool should be for dummies and provide actionable recommendations. That means no more expert opinion - Automated Marketing Management. That's a big claim.

NO SIDE EFFECTS

A magic pill is a no-brainer. Only upsides, no downsides.

I know the '10x Insights' claim can sound like a bowl of magic pills. But it is meant to be a reminder, an inspiration, that 10x is doable, that everything we need is available today. Using what is already available and then orchestrating it in an organisation to create impact is not easy. There is no cookie-cutter approach. You need to be a domain expert.

What about my favourite "pill" - CAUSAL AI?

Is it EASY?

There are causal discovery algorithms that build models entirely from data. But this approach becomes increasingly flawed the more variables are involved. For marketing, there is no professional use for them other than inspiration. The cost of failure is too high. You don't want to gamble with your brand.

We can also use LLMs to make initial assumptions about which variable has a causal effect on which other variable. It speeds up the process and provides an outside view.

However, a human domain expert is needed to validate and fine-tune such an a priori model. It also helps to apply some marketing science know-how, for example knowing that "satisfaction" is a short-term measure and "loyalty" is a long-term measure.

Is it simple? It is simpler than traditional models. But if you take your job seriously, it is far from being a button press.

Is it EFFECTIVE? Let's talk straight. You don’t know how good a model will be until you have done the work. To be sure, you actually need to run experiments based on the insights. Even then, results could be a coincidence.

The truth is this: Asking whether the model is telling the truth is not the right question. No model, no expert, no AI will ever tell the truth.

"All models are wrong, some are useful" - George Box

The question is: "What is the BEST way to gather evidence about what to do?" Causal modelling is guided by this question. It seeks to understand the relationship between actions and outcomes by controlling for artefacts that can obscure or bias results, such as multicollinearity, confounders, reversed causal directions and indirect effects. The more of these error sources you control for, the closer you get to the "best" way to gather evidence about what to do.
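To see why controlling for a confounder matters, here is a minimal sketch with simulated data. Everything in it is an assumption for illustration: the variable names (season, ad_spend, sales), the effect sizes, and the simple regress-out adjustment, which is a textbook technique and not how any particular Causal AI software works.

```python
import math
import random


def pearson_r(x, y):
    """Plain Pearson correlation between two equally long lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def regress_out(y, z):
    """Residuals of y after a least-squares fit on z (removes z's linear influence)."""
    n = len(y)
    mz, my = sum(z) / n, sum(y) / n
    cov = sum((a - mz) * (b - my) for a, b in zip(z, y))
    var = sum((a - mz) ** 2 for a in z)
    slope = cov / var
    return [b - my - slope * (a - mz) for a, b in zip(z, y)]


def simulate(n=5000, seed=0):
    """Season drives both ad spend and sales; ad spend itself has NO effect."""
    rng = random.Random(seed)
    season = [rng.gauss(0, 1) for _ in range(n)]          # hypothetical confounder
    ad_spend = [s + rng.gauss(0, 1) for s in season]      # depends on season only
    sales = [2 * s + rng.gauss(0, 1) for s in season]     # depends on season only
    naive = pearson_r(ad_spend, sales)
    adjusted = pearson_r(regress_out(ad_spend, season), regress_out(sales, season))
    return naive, adjusted


naive, adjusted = simulate()
print(f"naive correlation:   {naive:.2f}")
print(f"adjusted for season: {adjusted:.2f}")
```

The naive correlation comes out clearly positive although ad spend does nothing in this simulation. Once season is held constant, the correlation drops to roughly zero.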

It is like betting on a race. Take swimming. When Michael Phelps takes part, it is unknown whether he will break the world record or not. It is enough to know that Michael is most likely to win. Same with Causal AI.

Just choose the best method. When in doubt, use methods that use very different approaches, such as qual and quant, and combine them.

To conclude: Does causal AI deliver the truth? No. In many cases, it is simply the most sensible thing to do.

FOR EVERYONE? Is Causal AI "foolproof"? No. "Fools" would not even use it. Understanding the need for it is already a selection filter. Then the results have to be read in a certain way. This can be difficult for people who are used to reading descriptive and correlative results. There is a learning curve.

NO SIDE EFFECTS? If used incorrectly or in a limited way, Causal AI can produce misleading results. In the early years, when scientists wanted to evaluate our Causal AI software NEUSREL, they took their existing data sets and structural equation models and built a similar model in NEUSREL. As a result, the models showed very limited improvement. Such an approach ignores, for example, the confounding effect of situational and contextual factors. It uses a (not very useful) confirmatory research approach with a (useful) exploratory method.

In essence, Causal AI is not a magic pill. There is no such thing. Instead, it requires you to step up and be your own authority. As Albert Einstein said, "Blind faith in authority is the greatest enemy of truth."

It gets even more sobering.

What makes a country prosperous in economic, social or health terms? Econometric science tries to find out, but it has the hardest job in the world. Nothing is more complex than human society. There are millions of theories, but none of them are really good. Marketing science is not much better.

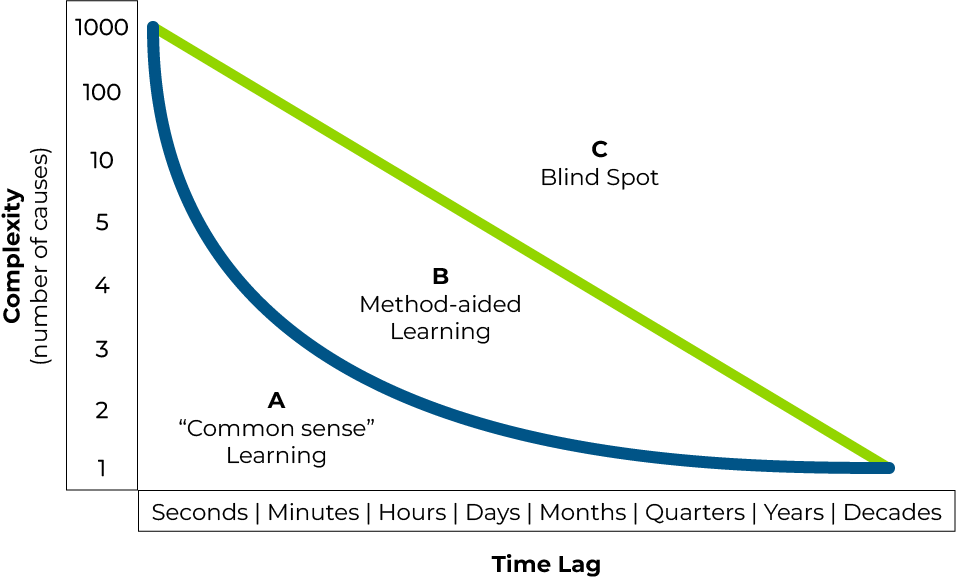

The more complex the problem, the harder it is to distil a useful model from the data. Two key factors drive complexity:

The number of drivers: with more than one driver, simple correlations can no longer be trusted, because each correlation mixes in the effects of all the other drivers. If you want to use modelling, you need (in theory) exponentially more data the more causes you have.

The time it takes for an action to produce an effect: the longer it takes to see the effect of a cause, the harder it is to collect enough data and also to use the data to find the true time lag.
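To make the first factor concrete, here is a back-of-the-envelope sketch. It assumes each driver is cut into just three levels (low, medium, high) and that you want 30 observations for every combination of levels. Both numbers are arbitrary assumptions chosen only to show the growth.

```python
def rows_needed(n_drivers, levels_per_driver=3, rows_per_cell=30):
    """Rows needed to see every combination of driver levels rows_per_cell times."""
    return rows_per_cell * levels_per_driver ** n_drivers


for n in (1, 2, 5, 10):
    print(f"{n:>2} drivers -> {rows_needed(n):,} rows")
```

Under these assumptions, one driver needs 90 rows, five drivers need 7,290, and ten drivers need about 1.8 million. Real models get by with less because they assume some structure, but the direction of travel is the same.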

In my 2014 book, The End of the KPI Illusion, I created this matrix from these two dimensions. "Intelligent trial and error" or more advanced "scientific experimentation" is how we learn in real life. It only makes sense when few causal drivers are active. The longer the time lag, the fewer drivers we can accept.

When it gets more complex, we can use Causal AI to perform method-based learning. This is zone B in the matrix.

Then there is a space where the dimensionality becomes too high or the time lags too large. This space is difficult to grasp even with machine learning. It is a space that is managed with intuition and risk management.

This insight should make us humble enough to realise that what we are after is not the ultimate truth. It is only to enable more useful decisions with insights.

Although causal AI is not a push-button method, can produce sub-optimal results if applied incorrectly, and requires some training to use, it is still the best way to gather evidence for decision making. Here is why.

Causal AI: Not a magic pill, but a magic effect

Causal AI is a modelling methodology that builds models (= mathematical formulas) of cause-effect relationships between the variables in a dataset. It is designed to answer 'why' questions. Do you want to do quantitative research and are you looking for the "why"? Then your job is to know which factors influence results the most.

You cannot use descriptive statistics, data comparisons or correlation analysis for this, simply because outcomes have multiple causal drivers. A univariate view is blind to the truth, which is multidimensional. If you look further, you will also soon learn that standard statistical modelling has serious limitations that make it not only impractical but also highly biased.

The application of Causal AI provides unbiased insights, uncovers hidden non-linearities and interactions, and ultimately results in models that produce predictions that are more accurate and stable over time. These models eliminate the inherent discrimination of AI, model drift and spurious results.

I wrote above about the limitations of causal AI. The method (like any modelling, really) needs a user who knows the context of the data inside out to put it in the right perspective. Overall, the stakes of using it are lower than with conventional statistics. Only "old school" modellers need to learn a new paradigm. For example, Causal AI cares about relevance rather than significance, and it works as a network model rather than an input-output model.

The technology has been around for about 10 years and has already created billions in value for companies. It provided the insight that led to T-Mobile's "un-carrier strategy", the beginning of a legendary growth story.

Don't look for a magic pill.

Don't be lazy. Instead, gather the best insights available, make decisions, and repeat.

That’s all you need to 10x your insights.